I dunno. I think the standard is Word or some other word processor like OpenOffice (I'll have to check), but Word is the standard. InDesign handles importing Word footnotes pretty well.

Let me know if there is something else that might help. I'm sure you'd much rather spend your time on something more productive than repetitive formatting or parsing. Do you do all the footnotes in Word and then import a Word document into InDesign?

Word is a good tool for being able to see some things visually but it's hard to automate (maybe David can help with some macros). But if InDesign can import a different format then that might be helpful.


I have a dream. The complete Puritan Corpus updated.

- Thread starter davejonescue

- Status: Not open for further replies.

davejonescue

Puritan Board Junior

I can only speak from personal experience, but even using the EEBO-TCP texts as they are within the Index I have been working on, I can get lost in dissertations by these authors speaking so deeply about a subject that it just hits the heart. There is no need to "bury" these texts and only resurrect them when they can be completely and precisely dealt with. Because what is more of an injustice: to leave them in the ground, forgotten, or to have them alive and read, even with the scars of imperfection? We have to understand that there are, at least in my linking, over 5,000 texts from Puritans and Non-Conformists. Many want to read them, but many people do not have the time or expertise to do the work. If this process can automate 90% of the corrections the EEBO-TCP texts need, even while omitting the footnotes, that is a 90% improvement on a resource that is already offered to the Christian community for free via EEBO-TCP. I don't see an injustice at all. People can still search out, format, and publish all the Puritan works they like; many are in the public domain. But instead of having to wait on the very limited number of Puritan publishers to edit, correct, and publish what they can, almost the entire corpus can already be available in a very readable state, to the world, for free. This discussion shows us that the technology is here. And as technology progresses, greater steps can be taken to improve the texts and take them to the next level.

Logan, Dave, when you start out with the premise that all the Latin text, Greek text, Hebrew text, and marginal notes, comments, and headings, which can be an extensive part of a work, will be omitted, and that the rest of the text itself may vary, with words and sentences missing that the typists omitted in error, what sort of collection is this? The question is: is it good enough, and does it fairly represent the author's original work?
If this were print, there is no question that no one would ever put out texts like this; they would be bad texts, and it does an injustice to the authors' works. App-based? I hate to see the debasing of expectations for good texts of an author's work. Maybe in some cases, with an uncomplicated text, this will work; in other cases, surely not. But that undercuts the idea of mass processing and treating all the texts the same, doing no more than using what EEBO has, with all its faults and intentional omissions.

Logan

Puritan Board Graduate


I see this as a progression of accessibility.

Obviously the most accurate are the original printed editions. Those are virtually inaccessible.

Next are the PDFs, which are more accessible but not particularly portable or versatile.

Next is EEBO, where the text is actually searchable, at the cost of some of the original accuracy.

Last would be this endeavor David is working on, which would have the most accessibility: better searchability, better portability. More accessibility than EEBO at the cost of some of the more scholarly details.

Obviously the ideal would be hand-proofing and correcting every single one against the originals, but I'd see this as a good base text effort for someone who wants to do that. As David said, this would be freely available with disclaimers. If it's useful to someone, great. If not, they still have the option of going back up the chain.

davejonescue

Puritan Board Junior

Well said, and agree.

Logan

Puritan Board Graduate

I did this as well. Just checked my word swap list, and I have about 7000 words so far.

I bet if we compiled our lists, it would be pretty big. If anyone wants to do this, let me know.

I would love to see your list if you'd be willing to share. It might come in handy for future projects.
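If several people's word-swap lists do get compiled, the main thing to watch is the same old spelling being mapped to different modern words in different lists. Here is a minimal Python sketch of a merge that sets those conflicts aside for checking in context. It is not anyone's actual script in this thread; it assumes each list is a {variant: modern} dictionary, and the sample words are hypothetical.

```python
def merge_replacement_lists(*lists):
    """Merge several {variant: modern} replacement lists into one master list.

    Returns (merged, conflicts). A conflict is a variant spelling that
    different lists map to different modern words; conflicts are kept out of
    `merged` so they can be checked in context instead of replaced blindly.
    """
    targets = {}  # variant (lower-case) -> set of proposed modern spellings
    for replacements in lists:
        for variant, modern in replacements.items():
            targets.setdefault(variant.lower(), set()).add(modern.lower())
    merged = {v: next(iter(m)) for v, m in targets.items() if len(m) == 1}
    conflicts = {v: sorted(m) for v, m in targets.items() if len(m) > 1}
    return merged, conflicts


# Hypothetical sample lists, not the real lists mentioned in the thread.
list_a = {"haue": "have", "vnto": "unto", "reade": "read"}
list_b = {"Haue": "have", "doe": "do", "reade": "reed"}
merged, conflicts = merge_replacement_lists(list_a, list_b)
```

Duplicates that agree ("haue" in both lists) simply collapse into one entry; only genuine disagreements end up in `conflicts`.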

Logan

Puritan Board Graduate

Thank you so much for your help; this is truly a blessing. I will start working on that spreadsheet today. I think I have a way to handle the issue of spellings that could stand for more than one word: if we keep all the words that were replaced, we can look over them and see which words are duplicated. We can then go into a program like Word (sorry, I'm not stuck on Word; it's just all I know), automatically find and highlight those words, and correct them in context. But again, thank you so much, and I will let you know when I have that spreadsheet done.

I'm not familiar with Zotero but is there a way to export your library? If there was some way to export it then it might be easy to generate a list of authors, titles, and links automatically.

Would there be a way, perhaps, of flagging where Latin/Greek/Hebrew and footnotes/margin notes/headings etc. had been omitted? That way someone could take advantage of the greater accessibility, but if they needed something closer to the original, they would at least have some indicator of where to refer back.

davejonescue

Puritan Board Junior

Within the text itself there is already a marker that looks something like <non-latin-text>, if I am not mistaken. A cleaner way to display these omissions would be not to cut out the indicator of Greek or Hebrew entirely, but to change it to something like <H-or-G omitted> when replacing the misspellings. All Latin text is displayed, though possibly spelled incorrectly. But I am with you: I would rather have some indicator that something was there, because otherwise it can make the text seem like gibberish.
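Swapping the existing marker for a visible placeholder is a one-line regular-expression substitution. A minimal Python sketch, assuming the marker really does read something like `<non-latin-text>` as recalled above (the actual files should be checked and the pattern adjusted). The square-bracket placeholder is a choice made here so that it is not mistaken for a tag in HTML or EPUB output.

```python
import re

# Assumption: omitted Greek/Hebrew is marked with something like
# "<non-latin-text>", as recalled in the thread; check the real files and
# adjust this pattern to whatever they actually contain.
OMISSION = re.compile(r"<\s*non-latin-text\s*>", re.IGNORECASE)


def flag_omissions(text, placeholder="[H-or-G omitted]"):
    """Replace each omission marker with a visible placeholder.

    Returns (new_text, count) so each file can also report how many
    passages readers may want to check against the original.
    """
    # A function as the replacement means the placeholder is inserted
    # literally, even if it contains backslashes.
    return OMISSION.subn(lambda _match: placeholder, text)
```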


davejonescue

Puritan Board Junior

I will check on that, but that is something I wanted to ask you about. I am already about 4,200 works into Word. Within Word I can get rid of the lines in the middle of the text, the letters used as hyperlinks for footnotes, and the page numbers in the middle of the text, using the "Find and Replace" feature. Do you think it is better to simply make RTF, TXT, and HTML files out of the DOCs once that material has been taken out, or will it be possible to do this the way you have set things up? The reason I am asking is that the footnotes may show up as miscellaneous letters scattered all over the texts, and those lines in the text are annoying. Since I have this many texts already transferred into Word, should I just take advantage of being able to remove them, then give you all of the DOCs so you can possibly run code to automatically convert them to RTF, TXT, etc., instead of downloading them from EEBO-TCP? But please let me know what works best; you are way smarter at this stuff than me.

Another question: is there a way to preserve the index markers I have created within the titles of the files? In the Index, each author has a distinct marker; for example, Richard Baxter is P-RB2, and the 20th work from Richard Baxter is P-RB2-20. The reason I ask is that if we can update these EEBO-TCP texts, it greatly increases the viability of using the open-source DocFetcher to create portable, fully searchable flash drives of these works that missionaries can use overseas, or that students and pastors can carry in their pockets as a mobile resource. What makes it even more viable is that DocFetcher Portable is said, if I am not mistaken, to work on Windows, Mac, and Linux. I would plan for this to be a free resource, available to all: a simple download with the program preinstalled, so that all someone would need to do is put it on a flash drive and they are ready to go.

I am not trying to do 100 things at once, lol; but just adding those index markers to the titles, if possible, makes it so much easier when in the index because you can see the difference between authors; and when dealing with 5,000+ works, that can make a huge difference.

Again, thank you so much for what you have done thus far. As far as exporting the Zotero library, I can check, but I think one issue will be generating the author. The authors were not linked automatically; I had to separate them manually as I was linking the collection.

davejonescue

Puritan Board Junior

Logan, I am also keeping an eye on the inevitable "master list" of misspelled words. I completely understand that the conversion of words can be automated using your script, but can the accumulation of the words that need to be changed also be automated using your method? If not, the original macro (and no, I am not just trying to use Word, lol) runs through all the DOCs and creates a list of the misspelled words within them, which would yield a complete "master list" if all the DOCs go through the macro. It would be awesome if your method can hunt down the misspelled words; if so, please disregard, I'm just wondering. Because if not, and since I have so many already in Word, it may be better to wait the month or so until I complete the list, so that we have the Word docs to run the macro on and thus create the "master list" for conversion. I have no idea of the capabilities of running scripts, or the power of Python or anything like that. Just let me know which way is most efficient, and that's what I will do.

Edit: please disregard; I went back and saw where you said you can automate the collection of misspelled words.


davejonescue

Puritan Board Junior

It gave me these options to export. Please let me know which, if any, works best for you.


Logan

Puritan Board Graduate


Try CSV and see what that gives you.
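Once the library is exported as CSV, generating the list of authors, titles, and links is mostly a matter of reading three columns. A minimal Python sketch using only the standard `csv` module. It assumes the header row has columns named "Author", "Title", and "Url" (check the first line of the exported file and adjust the names if they differ), and it also collects the titles that have no author, which is exactly the gap that would need filling in Zotero before export.

```python
import csv
import io


def read_zotero_csv(csv_text):
    """Return (works, titles_missing_author) from a Zotero CSV export.

    Assumes the header row contains "Author", "Title" and "Url" columns;
    check the exported file and adjust the names if they differ.
    """
    works, missing_author = [], []
    # Drop a byte-order mark if the export starts with one.
    reader = csv.DictReader(io.StringIO(csv_text.lstrip("\ufeff")))
    for row in reader:
        work = {field: (row.get(column) or "").strip()
                for field, column in (("author", "Author"),
                                      ("title", "Title"),
                                      ("url", "Url"))}
        works.append(work)
        if not work["author"]:
            missing_author.append(work["title"])
    return works, missing_author
```

In use, the file would be opened with `open("export.csv", encoding="utf-8")` (a hypothetical filename) and `f.read()` passed in; the second return value is the to-do list of links that still need an author.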

Logan

Puritan Board Graduate


I guess the short answer is that I'm confident that manipulating html files through scripts can be accomplished. So hyperlinks and page links can be automatically removed. However, if you're 4,200 documents in and are happy with what you have and it works with Logos then that's great too.
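To illustrate the kind of script meant here, this is a minimal Python sketch built on the standard library's `html.parser` that keeps the readable text and drops footnote anchors and page numbers. The markup it looks for is an assumption, not the real EEBO-TCP structure: footnote references are taken to be internal links (`<a href="#...">a</a>`) and page numbers to sit in elements with a hypothetical `pagebreak` class.

```python
import re
from html.parser import HTMLParser

VOID_TAGS = {"br", "img", "hr", "meta", "link", "input"}
BLOCK_TAGS = {"p", "div", "h1", "h2", "h3", "li"}


class FootnoteStripper(HTMLParser):
    """Collect readable text, dropping footnote anchors and page markers.

    Hypothetical markup assumptions (check them against the real files):
      * footnote references are internal links: <a href="#...">a</a>
      * page numbers sit in elements whose class includes `page_class`
    """

    def __init__(self, page_class="pagebreak"):
        super().__init__(convert_charrefs=True)
        self.page_class = page_class
        self.skipping = []  # open tags inside an element being dropped
        self.parts = []

    def handle_starttag(self, tag, attrs):
        if tag in VOID_TAGS:  # no end tag will follow these
            if tag == "br" and not self.skipping:
                self.parts.append("\n")
            return
        if self.skipping:  # nested inside a dropped element
            self.skipping.append(tag)
            return
        attrs = dict(attrs)
        href = attrs.get("href") or ""
        classes = (attrs.get("class") or "").split()
        if (tag == "a" and href.startswith("#")) or self.page_class in classes:
            self.skipping.append(tag)
        elif tag in BLOCK_TAGS:
            self.parts.append("\n")

    def handle_startendtag(self, tag, attrs):
        # Self-closing tags such as <br/> never get a matching end tag.
        if tag == "br" and not self.skipping:
            self.parts.append("\n")

    def handle_endtag(self, tag):
        if tag not in VOID_TAGS and self.skipping:
            self.skipping.pop()

    def handle_data(self, data):
        if not self.skipping:
            self.parts.append(data)


def clean_html(html, page_class="pagebreak"):
    """Return plain text with footnote letters and page numbers removed."""
    parser = FootnoteStripper(page_class)
    parser.feed(html)
    parser.close()
    lines = (re.sub(r"\s+", " ", line).strip()
             for line in "".join(parser.parts).splitlines())
    return "\n".join(line for line in lines if line)
```

External links are kept as ordinary text; only links that point inside the same document are treated as footnote markers.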

Does Logos accept html files as personal documents?


Sure, we can explore options. I'm not going to be able to devote tons of time but I'm happy to help if I can.


I'm not familiar with DocFetcher but I'm pretty confident that the answer is "yes". Where are you placing these index markers? Just in the file titles? If so then yes, that's definitely possible to retain.
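If the markers live at the start of the file titles, keeping them is just a matter of always building output names from them. A minimal Python sketch. The marker pattern is inferred from the two examples given (P-RB2 and P-RB2-20), so treat it as an assumption, and the "marker author - title" naming layout is only an illustration.

```python
import re

# Hypothetical marker format inferred from the examples in the thread:
# "P-" + author code (P-RB2 = Richard Baxter) + "-" + work number (P-RB2-20).
MARKER = re.compile(r"P-[A-Z]+\d*-\d+")
UNSAFE = re.compile(r'[<>:"/\\|?*]')  # characters Windows forbids in filenames


def build_filename(marker, author, title, ext):
    """Put the index marker first so files sort by author, then by work."""
    if not MARKER.fullmatch(marker):
        raise ValueError(f"unexpected index marker: {marker!r}")
    name = UNSAFE.sub("", f"{marker} {author} - {title}")
    name = re.sub(r"\s+", " ", name).strip()
    return f"{name}.{ext}"


def marker_of(filename):
    """Recover the index marker from the start of a filename, or None."""
    match = MARKER.match(filename)
    return match.group(0) if match else None
```

Because the marker leads every name, the HTML, DOCX, EPUB, and PDF copies of one work sort together, and a file dropped into a search tool can still be traced back to its place in the Index.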

That’s the good thing with Logan’s program: misspellings can be added and the program rerun.

Indeed, if I correct my code to actually deal with whole words instead of substrings (my bad!). But yes, one should be able to run against the master list as many times as you want and correct the files even after the fact (with some slight modification). Alex sent me his word replacement list and it's going to be super helpful.
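The substring problem is easy to picture: a plain text replacement of "wee" with "we" also turns "weeke" into "weke". One way to restrict changes to whole words is to find each word once and look it up in the master list. A minimal Python sketch, not Logan's code, assuming the master list is a {variant: modern} dictionary of single words:

```python
import re

# A "word" here is letters, optionally joined by apostrophes (kick'd, God's).
WORD = re.compile(r"[A-Za-z]+(?:'[A-Za-z]+)*")


def apply_replacements(text, replacements):
    """Replace whole words only, using a {variant: modern} master list.

    Each word in the text is matched as a unit and looked up, so a variant
    never matches inside a longer word. A variant written with a capital
    first letter gets a capitalised replacement.
    """
    lookup = {k.lower(): v for k, v in replacements.items()}

    def fix(match):
        word = match.group(0)
        modern = lookup.get(word.lower())
        if modern is None:
            return word  # not in the master list: leave untouched
        return modern[:1].upper() + modern[1:] if word[0].isupper() else modern

    return WORD.sub(fix, text)
```

Since each word costs one dictionary lookup, rerunning the whole corpus after the list grows stays cheap; multi-word entries would need a different approach.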

davejonescue

Puritan Board Junior

Hello Logan. Exporting the Zotero library as CSV almost got us there. The problem now is that some of the links have the author and some don't, depending on whether I tried to "take a picture" of the webpage or not. I was doing that with the first few hundred links until I realized I just needed the links and not a copy of the webpage. What I can do, though, since Zotero gives me the option, is go in, where everything is neatly organized, and add the author to the links that do not have one before export, so that when I export the CSV, the author is included. This should only take me a week or so, maybe two, but it will make for a much smoother collection, as each work will have the author's name included. I believe it would be much more of a pain to backtrack after downloading the works, figure out who they are from, and then re-title them to include the author. Also, in the same pass I can add the index marker to the title, thus killing two birds with one stone.


davejonescue

Puritan Board Junior

In regard to spellings that could stand for different words, like "reed," which could mean "read" but could also be "reed," I have found quite a useful list of homophones online. We could use it as the basis of an exclusion list for the "master-list," potentially doing away with the majority of mishaps caused by non-standardized spelling. If we simply leave these words off the master-list, even when misspelled, wherever a spelling could be one word or the other, then all the remaining corrections should, if I am not mistaken, be appropriate.

Then, at a future time, when editing the texts, one can create a macro (or a script) to highlight the homophones and correct them in context (apart from this project.)
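Both halves of this idea, keeping homophones out of the automatic pass and finding them later for correction in context, can be sketched in a few lines of Python. This is an illustration only: the exclusion list and master list are assumed to be a set and a {variant: modern} dictionary, and holding back an entry when either side of it is a listed word is a deliberately cautious design choice made here.

```python
import re

WORD = re.compile(r"[A-Za-z]+(?:'[A-Za-z]+)*")


def split_master_list(master, keep_as_is):
    """Split a {variant: modern} list into (safe, review) dictionaries.

    `keep_as_is` is the exclusion list: homophones such as reed/read, plus
    context-dependent forms such as "sitteth" or "thou". An entry goes to
    `review` if either its variant or its modern form is in that list, since
    in both cases an automatic change could alter the meaning.
    """
    keep = {w.lower() for w in keep_as_is}
    safe, review = {}, {}
    for variant, modern in master.items():
        held = variant.lower() in keep or modern.lower() in keep
        (review if held else safe)[variant] = modern
    return safe, review


def words_to_check(text, keep_as_is):
    """Return (offset, word) for each excluded word, for correction in context."""
    keep = {w.lower() for w in keep_as_is}
    return [(m.start(), m.group(0)) for m in WORD.finditer(text)
            if m.group(0).lower() in keep]
```

Only `safe` would be fed to the automatic run; `words_to_check` gives the positions an editor (or a later highlighting macro) would visit.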


Logan

Puritan Board Graduate

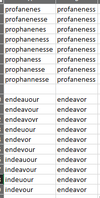

I have my scripts mostly ready for the full list so I'm showing a sample of the result here. Keep in mind that all of this is automated (i.e., just provide the URL, hit the button, and about 30 seconds later you have this result). There are some more word substitutions to add to the list but all in all I'm very pleased with the result.

Before:

After:

Now to add automatic epub generation to the process.
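For a sense of what automatic EPUB generation involves at its simplest: an EPUB is a ZIP archive with a few required files. This is a minimal, standard-library-only Python sketch of a one-chapter EPUB 3, not the process used in this thread. The EPUB container format requires the `mimetype` entry to be first and stored uncompressed, which the code does explicitly. Real converters handle far more (styles, chapters, footnotes), and running the output through EPUBCheck would be the way to confirm validity.

```python
import io
import zipfile
from datetime import datetime, timezone
from html import escape

CONTAINER = """<?xml version="1.0" encoding="UTF-8"?>
<container version="1.0" xmlns="urn:oasis:names:tc:opendocument:xmlns:container">
  <rootfiles>
    <rootfile full-path="OEBPS/content.opf" media-type="application/oebps-package+xml"/>
  </rootfiles>
</container>"""


def build_epub(book_id, title, author, paragraphs):
    """Return the bytes of a minimal one-chapter EPUB 3 file."""
    t, a = escape(title), escape(author)
    modified = datetime.now(timezone.utc).strftime("%Y-%m-%dT%H:%M:%SZ")
    opf = f"""<?xml version="1.0" encoding="UTF-8"?>
<package xmlns="http://www.idpf.org/2007/opf" version="3.0" unique-identifier="id">
  <metadata xmlns:dc="http://purl.org/dc/elements/1.1/">
    <dc:identifier id="id">{escape(book_id)}</dc:identifier>
    <dc:title>{t}</dc:title>
    <dc:creator>{a}</dc:creator>
    <dc:language>en</dc:language>
    <meta property="dcterms:modified">{modified}</meta>
  </metadata>
  <manifest>
    <item id="nav" href="nav.xhtml" media-type="application/xhtml+xml" properties="nav"/>
    <item id="text" href="text.xhtml" media-type="application/xhtml+xml"/>
  </manifest>
  <spine><itemref idref="text"/></spine>
</package>"""
    nav = f"""<?xml version="1.0" encoding="UTF-8"?>
<html xmlns="http://www.w3.org/1999/xhtml" xmlns:epub="http://www.idpf.org/2007/ops">
<head><title>{t}</title></head>
<body><nav epub:type="toc"><ol><li><a href="text.xhtml">{t}</a></li></ol></nav></body>
</html>"""
    body = "\n".join(f"<p>{escape(p)}</p>" for p in paragraphs)
    text = f"""<?xml version="1.0" encoding="UTF-8"?>
<html xmlns="http://www.w3.org/1999/xhtml">
<head><title>{t}</title></head>
<body><h1>{t}</h1>
{body}
</body>
</html>"""
    buffer = io.BytesIO()
    with zipfile.ZipFile(buffer, "w") as z:
        # The mimetype entry must come first and be stored uncompressed.
        z.writestr("mimetype", "application/epub+zip", compress_type=zipfile.ZIP_STORED)
        for name, data in (("META-INF/container.xml", CONTAINER),
                           ("OEBPS/content.opf", opf),
                           ("OEBPS/nav.xhtml", nav),
                           ("OEBPS/text.xhtml", text)):
            z.writestr(name, data, compress_type=zipfile.ZIP_DEFLATED)
    return buffer.getvalue()
```

Writing the result to disk is then `open("book.epub", "wb").write(build_epub(...))`, with a hypothetical filename and identifier.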



Logan

Puritan Board Graduate

Please do Edward Leigh’s Body of Divinity for y’all’s first project.

Here's an initial pass as an epub.

The list of word replacements Alex had was over 7000. The more variant spellings we add to that to get replaced, the better the end result will be but already I think it's a terrific improvement.


davejonescue

Puritan Board Junior

This is truly amazing, brother, and honestly I do not know how to thank you. On behalf of the entire Christian community, I thank you. You have taken a process that seemed so burdensome and daunting and made it a reality. And I want to thank Alex, who donated the word list. This process is going to single-handedly transform these texts into almost perfectly readable resources. For many, the ability to carry 5,000+ Puritan/Non-Conformist resources on their phones, e-readers, tablets, etc. will do away with having to hunt these texts down, spend tons of money on physical books, or have access to academic subscriptions to view the facsimiles. I do not think I am exaggerating. What you have done via your scripts will undoubtedly have a huge impact on the proliferation of Puritan literature, not only in America but around the world. So often, on the Reformed used-book sites on Facebook, people in undeveloped countries would beg to be sent Reformed and Puritan literature, treasuring what many of us take for granted; but we all know the shipping costs to places like Africa and India. With the conversion of these to EPUBs, one will be able to send the entire corpus with a few emails, free of charge.

This will also allow us, via DocFetcher Portable, to create a searchable Puritan index that people can take overseas (or use at home) on a single flash drive, which in essence creates a no-cost Puritan commentary on Scripture. Again, I really believe this work is going to have a ripple effect throughout all of Christendom, and it comes just as curiosity about Puritan literature is growing every day. Please let me know whatever I can do, whether it be working on the "master-list," downloading, converting, you name it. This is a dream come true. Glory be to God, and praise Jesus. This is amazing.


davejonescue

Puritan Board Junior

Hey Logan, sorry for the above quote; I don't know how to tag you any other way. I was online last night and found this. I don't know if it will be helpful to you when it is time to start working further on the "master-list." I'm still churning away at tagging the authors of the works in Zotero, but I have the next two days off, so I should get most of them done then. Below is a link to a script for "removing duplicate words in a list." This may further automate the creation of the master-list by easily removing the duplicates, if that is not done when the misspelled words are officially collected. Talk to you later, and I hope you are having a great day.

GitHub - the-c0d3r/remove-duplicate: A simple script to remove duplicate words in wordlists.


Logan

Puritan Board Graduate


Thanks Dave, I did actually have a plan already for removing duplicate words. The initial pass is going to be messy because there will be a ton of "words" that aren't in the dictionary. Some will be Latin, and some will be partial words (e.g., in words with missing characters noted, "un*versal" becomes "un" and "versal").

So it's going to take a lot of culling. But definitely possible. I was going to try to create that script right now actually.
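Part of that culling can happen at the tokenizing step, so that "un*versal" never becomes two bogus words in the first place. A minimal Python sketch, assuming "*" is the missing-character mark as in the example above (other marks can be passed in if the files use them): words containing a gap mark are set aside for manual review, and the rest are deduplicated case-insensitively, keeping the first spelling seen.

```python
import re


def split_tokens(text, gap_marks="*"):
    """Split text into (words, gapped) without breaking words at gap marks.

    Splitting on letters alone turns "un*versal" into "un" and "versal", two
    fragments that both look like misspellings. Here a gap mark is treated as
    part of the word, and any word containing one is set aside for review.
    """
    token_re = re.compile("[A-Za-z'" + re.escape(gap_marks) + "]+")
    words, gapped = [], []
    for token in token_re.findall(text):
        token = token.strip("'")
        if not any(ch.isalpha() for ch in token):
            continue  # a lone footnote asterisk, stray apostrophes, etc.
        if any(ch in gap_marks for ch in token):
            gapped.append(token)
        else:
            words.append(token)
    return words, gapped


def unique_in_order(words):
    """Drop case-insensitive duplicates, keeping the first spelling seen."""
    seen, out = set(), []
    for word in words:
        if word.lower() not in seen:
            seen.add(word.lower())
            out.append(word)
    return out
```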

In the meantime I've incorporated the ability to output:

- Clean markup files (html)

- Microsoft Word .docx files

- epubs (with stylesheets for reasonably good formatting)

- Typeset (kerned) PDFs

davejonescue

Puritan Board Junior

Wow, this is awesome. Those Word docs are really going to come in handy, because once this project is complete, I am going to go back and redo the entire Logos Puritan Index with the corrected texts, and the Personal Books must be done in DOCX. This will greatly improve the Index. As always, let me know if you need anything or need me to do anything; and again, thank you so much for this.

This is amazing.

davejonescue

Puritan Board Junior

From what I understand, the project has a defined scope, and that is:

1. Download all the Puritan/Non-Conformist works from EEBO-TCP.

2. Run all the works through an automated process to find the misspellings across the entire corpus.

3. Create a "master-list" of words to be corrected.

4. Run all the works through the correction process using that "master-list."

5. Create PDF, DOCX, EPUB, and HTML files of all the works.

6. Put all those files into the public domain for universal access and use.

7. Project complete.

From there, individuals will be able to create their own projects, publications, edits, collections, etc. Also, we have to clarify what is considered "archaic." I think it has been decided that we are not going to try to replace words like "sitteth" or "standeth," because that would open a can of worms: having to define the archaic spelling in context. The same goes for "thee" and "thou"; they can mean different things in different contexts. But where there is an archaic spelling like "kick'd," it can be replaced with "kicked," or "odour" with "odor." Basically, if there is a contemporary spelling that isn't dependent on context for clarification, it can be corrected. Homophones, likewise, will be left uncorrected, because "correcting" them can make the text worse, whereas the author's point usually still comes through even when the word is misspelled.

So, since Logan has created scripts to automate the entire process, and Alex has provided a correction list of a momentous 7,000 words, the main time spent on the project is really in building on that corrective "master-list." If we can run every work through a script that lists the misspelled words, get rid of the duplicates, and provide an alternative for what remains (which will mean weeding through thousands upon thousands of misspelled words), then with that list in hand we should be able to simply run the corpus through and automatically produce the corrected text in the above formats.

After that, people will be able to decide whether they want to go through each one and format it perfectly, format it for publishing as a physical book, create better e-books, or build websites with the HTML files. All I envision (at least on my part) is a single website with an option to download each work in the available outputs, for people to do with as they see fit, just as EEBO-TCP did for us to make this possible.

This is so that this isn't a perpetual project for the people now involved, but can be a Christian community effort, seen as one step closer to the goal of complete correction and a complete Puritan corpus, without fully reaching that goal, if that makes sense. Someone has already inquired about setting up Amazon direct publishing to create physical books from these works. My response would be that that is up to them. It is not something I desire to do, but if they want to take the time to format and edit the work for publication, that is fine; it would be my pleasure to have played a small part in making that process easier and to have helped get Puritan works further into the hands of Christians. That goes for any work anyone decides to do. And I think that is the heart of everyone involved, even if they have separate, personal plans for the works once they are completed: to put them through this process, release them into the public domain, and put them in people's hands to do with as they will. Being in the public domain in these output formats, they can also be offered freely to the world, with improved readability, so that everyone can benefit from reading Puritan literature, regardless of income or region.

So basically, if I am not mistaken, this isn't going to be an ongoing, perpetual project, but a full-effort initial project with a simple goal in mind: to automate the correction of the EEBO-TCP Puritan/Non-Conformist catalogue only as far as automation allows. I don't think any of us wanted to become publishers (again, I could be wrong) or full-time editors. But I really believe this project, as is, is going to improve the readability of these texts to such a degree that most, if not all, readers will feel just as comfortable reading them as they would a book from the store or an e-book from an online publisher, even with the errors.

No automation will replace a human editor going over each text, formatting it, and correcting it by hand. The goal of this project is to automate as much of that work as possible, so that hand editing is the only step left between an imperfect document and a perfect one. Not only will that take the brunt of the work off future editors and publishers; in the meantime it will make the imperfect documents far more readable and beneficial. With only a handful of Puritan publishers and thousands of documents by hundreds of Puritan authors, it may be a long time before perfectly published editions exist. The hope is that people would struggle only to find the time to read all the available works, not to find readable copies of them.

In His Service,

For His Bride,

Soli Deo Gloria

Logan

Puritan Board Graduate

Dave, Logan and others... Hopefully a feedback mechanism (email?) can be set up to report other archaic spellings, errors, and so on for future iterations. This is a wonderful project. Thank you.

I've written another script that collates all the unique, potentially non-standard spellings from the body of files, and I'll go through those and add them to the master list. So at the end of the day there should be (almost) no whole words left uncorrected, though I'm sure a few will always slip through. I've already run it and added another thousand words to Alex's list. Once I have more files I'll run it again and improve the list.

But if others are found, I'd be happy to run it again, and I'm happy to make all my scripts and the master list available to anyone who wants to use them.
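Logan's scripts aren't posted in this thread, so the following is only a minimal Python sketch of the kind of collation pass he describes, not his code. It assumes you have a set of known modern words (`known_words`) and the master replacement list as a dict (`replacements`); both names and the function `collect_candidates` are hypothetical. It counts every word that neither list covers, so the most frequent unrecognized forms can be reviewed and added first.

```python
import re
from collections import Counter

# Assumption: a "word" is a run of ASCII letters, optionally with one
# internal apostrophe (e.g. "lov'd").
WORD_RE = re.compile(r"[A-Za-z]+(?:'[A-Za-z]+)?")


def collect_candidates(texts, known_words, replacements):
    """Count lowercase words that are neither in the modern word list nor
    already covered by the replacement (master) list."""
    counts = Counter()
    for text in texts:
        for word in WORD_RE.findall(text):
            key = word.lower()
            if key not in known_words and key not in replacements:
                counts[key] += 1
    return counts
```

Calling `collect_candidates(texts, modern_words, master_list).most_common(200)` on the cleaned files would list the 200 most frequent unrecognized spellings to check by hand before adding them to the master list.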

Logan

Puritan Board Graduate

Please do Edward Leigh’s Body of Divinity for y’all’s first project.

We have over 10,000 replacement words in the database now, and I ran Leigh's Body of Divinity through it again; the results looked pretty good.

Also, all the footnote links are preserved in the document.

I uploaded the generated PDF, .docx, and .epub for anyone interested.

These were downloaded, cleaned, and converted (all automated) from this source:

Dropbox link (file since deleted)
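For readers curious how a replacement list like this gets applied, here is an equally hypothetical Python sketch (not the project's actual script). It swaps whole words using a dict of lowercase archaic spellings (`replacements`) and keeps the original capitalization; `apply_replacements` is an illustrative name. Real EEBO-TCP text (long s, contractions like "lov'd", words hyphenated across line breaks) would need more handling than this.

```python
import re

# Assumption: list keys are lowercase, purely alphabetic words.
WORD_RE = re.compile(r"[A-Za-z]+")


def apply_replacements(text, replacements):
    """Replace whole words found in `replacements`, preserving ALL-CAPS
    and an initial capital letter."""

    def fix(match):
        word = match.group(0)
        new = replacements.get(word.lower())
        if new is None:
            return word  # not in the list: leave untouched
        if word.isupper() and len(word) > 1:
            return new.upper()  # e.g. a heading in capitals
        if word[0].isupper() and new:
            return new[0].upper() + new[1:]  # sentence-initial word
        return new

    return WORD_RE.sub(fix, text)
```

Looking each matched word up in a dict keeps the pass linear in the length of the text, which matters with a list of 10,000+ entries; building one giant alternation regex from the keys would also work but is harder to maintain.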

davejonescue

Puritan Board Junior

Brother, I am with you too. Going through 5,000 docs and shortening titles, oh, it seems so tedious. But it is that slow and steady work, keeping the goal in mind. Remember, your expertise in scripting and automation has literally shaved years off this process, in fact bringing an almost impossible task not only into the realm of possibility but within inches of actuality. It is OK to take it easy and be the tortoise instead of the hare. The importance of your work here cannot be measured, and I will be praying for your strength and patience as you progress. Right now, I am trying to have the Puritan Conference on one screen while doing the titles/authors on the other (I love a dual-screen set-up), but the former seems to be winning at the moment, lol. But again, I believe this will have a ripple effect through the kingdom, especially as inquiry into Puritanism is picking up steam. What you are doing is momentous. God is allowing you to head a project that will not only "standardize" (meaning make accessible) an entire genre of literature for the general Christian reader, from a period some regard as the greatest era of Church history theologically and devotionally, but also redefine how scholars, pastors, and laymen research the Puritans once these works are put into a searchable index like Logos or DocFetcher. Please let me know how I can help, and if that word list gets tiresome, save some for me when I am done with these titles/authors, and I will work on it so you can have a break for a while. Thank you so much for all you do for His Bride, and for His Glory.
